It is absurd for businesses to treat all customers equally

(this is the Day 2 recap of my weeklong Marketing Academy training - a collaboration between Google and Wharton)

We heard from 2 professors today: Pete Fader on the topics of Customer Centricity and Lifetime Value, and Elea McDonnell Feit on running Business Experiments.

Key Takeaways

- It is absurd for businesses to treat all customers equally

- Focus most of your resources on customers with the highest Customer Lifetime Value (CLV), but hedge with the long tail

- Be crystal clear whether a new program is aimed at retaining customers or developing them

- When conducting a marketing experiment, always remember that randomization will set you free

- If you find that the costs of conducting the experiment will outweigh the benefits, don’t run the experiment

We do awesome things for (awe)some people

Right off the bat, Professor Fader made a rather bold statement: companies should never aim to please all of their customers, simply because the return on investment would be extremely low.

Instead, we should acknowledge the differences between customer segments and take advantage of that difference (in a good way…of course).

In the world of “Customer Development” (more on this in a little bit), it’s very rare for “ugly ducklings” (i.e. low-spend customers) to turn into “beautiful swans” (loyal, die-hard fans), so it’s absurd to treat all customers in the same way.

So who should we focus on?

The concept of Customer Centricity helps us answer this question: it is about finding a SELECT SET of customers and maximizing their long-term financial value to the firm.

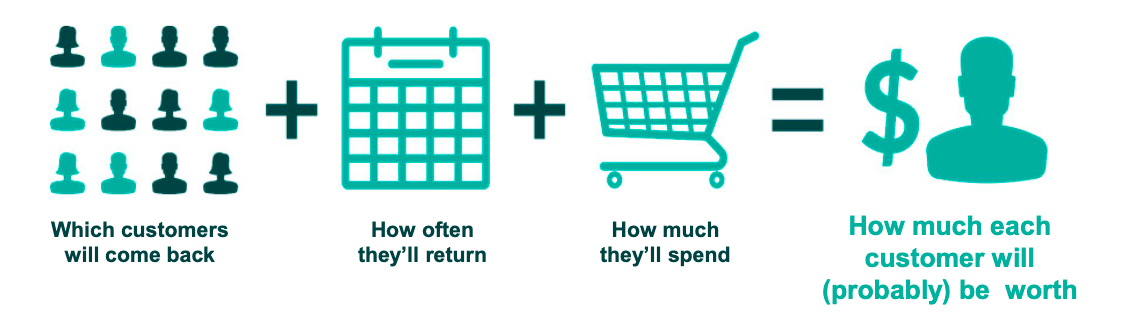

Back to the concept of Customer Lifetime Value (CLV) we talked about on Day 1, Professor Fader defines it as:

- CLV = A prediction of each customer’s profitability over their entire (past and future) relationship

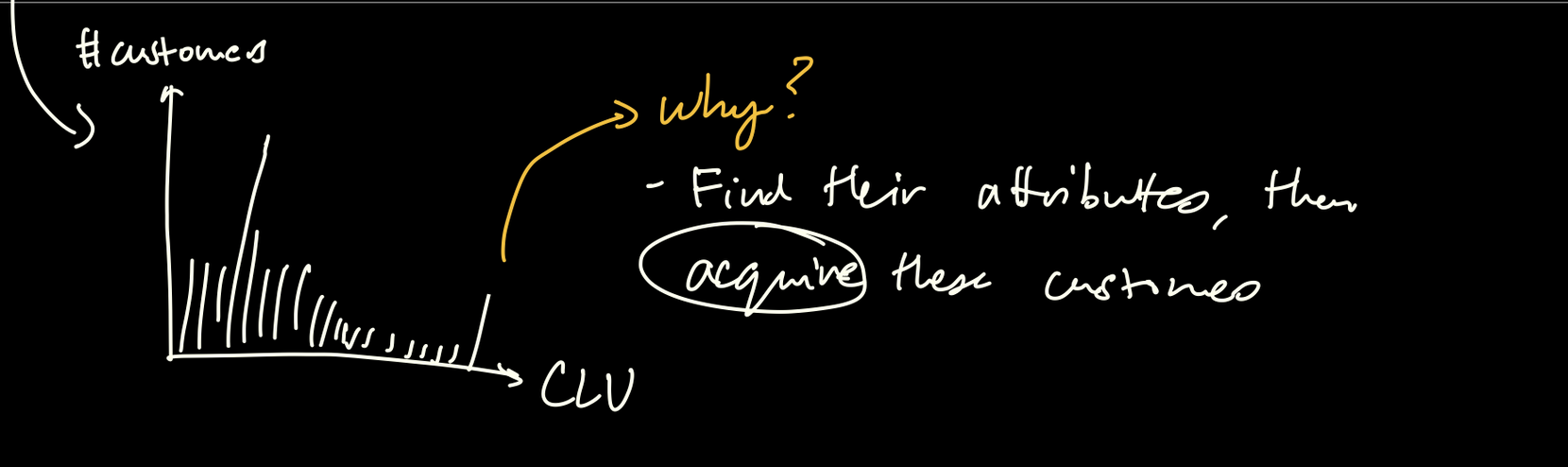
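To make the definition a bit more concrete, here is a minimal sketch of a CLV-style calculation. It is not Professor Fader's model: it uses a common textbook simplification that assumes a constant margin per period, a constant retention rate, and a fixed discount rate, and it only looks at the future part of the relationship. The function name, the parameters, and the three customers are all hypothetical.

```python
def simple_clv(margin_per_period, retention_rate, discount_rate, periods):
    """Expected discounted margin over future periods (textbook simplification)."""
    total = 0.0
    for t in range(periods):
        survival = retention_rate ** t       # chance the customer is still active in period t
        discount = (1 + discount_rate) ** t  # time value of money
        total += margin_per_period * survival / discount
    return total


# Hypothetical customers: (margin per year, yearly retention rate)
customers = {
    "A": (100.0, 0.90),
    "B": (300.0, 0.50),
    "C": (50.0, 0.95),
}

# Rank customers from highest to lowest estimated CLV over 5 years
ranked = sorted(
    customers,
    key=lambda name: simple_clv(*customers[name], discount_rate=0.1, periods=5),
    reverse=True,
)
print(ranked)
```

Even in this toy version, a high-spend customer who churns quickly and a low-spend customer who sticks around can end up in a different order than a simple ranking by current spend would suggest.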

Basically, find the customers with the highest CLV, figure out what makes them have high CLV (i.e. what are their attributes?), then acquire more customers like them!

Simple. Right?

The Paradox of Customer Centricity

The problem is: the more a company focuses on its high-CLV clients, the more it needs a solid base of customers to stabilize the overall mix (after all, what if a large customer suddenly leaves?).

Non-focal customers, therefore, should not be ignored. Instead, these customers establish a solid foundation for the business. For example, their stable cash flow can be used to invest in retention or development programs.

Speaking of retention and development…

The Changing Nature of Retention

First, there are 3 building blocks of Customer Centricity:

- Acquisition

- Retention

- Development

Between Acquisition and Retention, if you have to choose, retaining wins in importance every time.

Examples of Development

At McDonald’s, if you hear “You want fries with that?” → That’s an example of cross-selling

If you hear “You want to supersize that?” → That’s an example of upselling

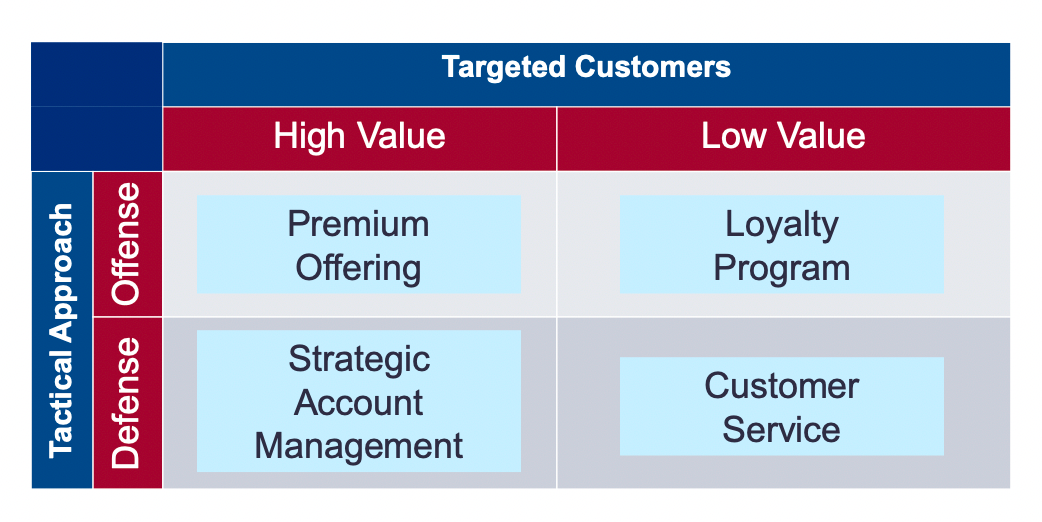

So what are some of the tactics when it comes to acquiring, retaining, and developing?

We can use a matrix of high vs. low-value customers, and an offensive-defensive approach:

7 Questions to ask BEFORE running a Marketing experiment:

- What is the business question the experiment will answer? What decision are you trying to inform?

- What marketing treatments will you test? How do the treatments relate to the business question?

- What setting will you run the test in? How close is this to the setting where you want to apply the findings? Is this a lab or a field test?

- What response measure(s) will you use to compare treatments? Be sure to specify one key performance indicator (KPI). Make sure you consider time.

- What is the unit of analysis? At what level are you assigning treatments and measuring responses?

- How will subjects be selected for inclusion in the test?

- How will treatments be assigned to subjects?
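For the last question, the answer from the session was randomization. Here is a minimal sketch of what random assignment could look like in practice. The function name, the client IDs, and the treatment labels are hypothetical, and the fixed seed is only there so the split can be reproduced and audited later.

```python
import random


def assign_treatments(subjects, treatments, seed=None):
    """Randomly assign each subject to one treatment, keeping groups balanced."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)  # random order removes any pattern in how subjects were listed
    # Deal the shuffled subjects round-robin so group sizes differ by at most one
    return {
        subject: treatments[i % len(treatments)]
        for i, subject in enumerate(shuffled)
    }


# Hypothetical unit of analysis: individual clients
clients = [f"client_{i}" for i in range(10)]
assignment = assign_treatments(clients, ["control", "new_creative"], seed=42)

for client, treatment in sorted(assignment.items()):
    print(client, treatment)
```

The point of shuffling first is that nobody (including me) gets to hand-pick which clients receive the new treatment, so differences in the KPI are less likely to come from who was chosen.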

Reflection from Jeff

Last year, I ran a pilot program at work that was basically a marketing experiment. The premise was we should work with creative partners to train our clients on ad creatives.

Although the pilot turned out great, I can 100% see how, if I had gone through these 7 questions, the experiment would have been more structured and the results might have been (even) better.

For example, while I had clear KPIs for the pilot program (% increase in click-through rates for the new ads), I didn’t think much about the setting in which the pilot would be run (lab test = low real-world validity; testing with real clients = high real-world validity, but risky since the clients might end up with worse ads).

Great food for thought for next time!

Best practices for running Marketing experiments

(note: the bulk of this session was dedicated to a real-time case study in which we were split into breakout groups and tasked with running a marketing experiment for a canned soup brand, so total academic content was a little light)

Don’t run tests that cost more than the potential loss from making a bad decision

While this sounds extremely obvious when we say it out loud, our egos often get in the way. As humans, we find it hard to stop a course of action once we have committed to said action. So it’s important to take a step back and objectively review the progress so far and ask “if we continue and find X, is it still worth it at this point?”
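A rough way to sanity-check this before committing is to put numbers on it. The sketch below is my own back-of-the-envelope version, not something from the session: it assumes you can estimate the cost of the test, the loss from a bad decision, and how likely you are to make that bad decision without testing, and it optimistically assumes the test removes that risk entirely. All names and figures are hypothetical.

```python
def worth_testing(test_cost, loss_if_wrong, prob_wrong_without_test):
    """Return True if the test costs less than the expected loss it could prevent."""
    # Expected loss from deciding without a test (assumed fully avoided by testing)
    expected_loss_avoided = loss_if_wrong * prob_wrong_without_test
    return test_cost < expected_loss_avoided


# Hypothetical numbers: a wrong call costs $50,000 and we think we'd be wrong half the time
print(worth_testing(test_cost=10_000, loss_if_wrong=50_000, prob_wrong_without_test=0.5))
print(worth_testing(test_cost=30_000, loss_if_wrong=50_000, prob_wrong_without_test=0.5))
```

Because a real test rarely removes all of the risk, this check leans in favor of testing, so a "no" here is a fairly strong signal to skip the experiment.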

Be prepared to find no effect

This is more of a real-world implication: no matter how much prep you do for the experiment, and no matter how sure you are that the results will be “life-changing,” we have to be ready to accept any and all potential results of an experiment.

At work, I once ran another pilot that seemed meaningful for a small group of clients (5 total), but when we tried to scale it out to a larger group (~100), we saw minimal impact overall. So we made the difficult decision to end the program and invest the marketing budget in other initiatives.

It was a tough pill for me to swallow (since I drove it from the beginning), but this is a prime example of where data-driven decisions outperform decisions driven by human emotions.

See you in the next Debrief!

Got feedback for this edition of The Debrief? Let me know!

Thanks for being a subscriber, and have a great one!