DeepSeek: What Actually Matters (for the everyday user)

Hey friends - In this comprehensive guide, I break down the reality behind DeepSeek's recent developments, separating fact from fiction.

You'll learn what DeepSeek's breakthroughs actually mean for everyday AI users, how to safely access advanced AI features for free, and how to make informed decisions about incorporating DeepSeek into your workflow.

Watch it in action

Resources

- My free AI Toolkit

- The Notion page presented in the video

- Ben Thompson's DeepSeek FAQ analysis on Stratechery

Key Takeaways

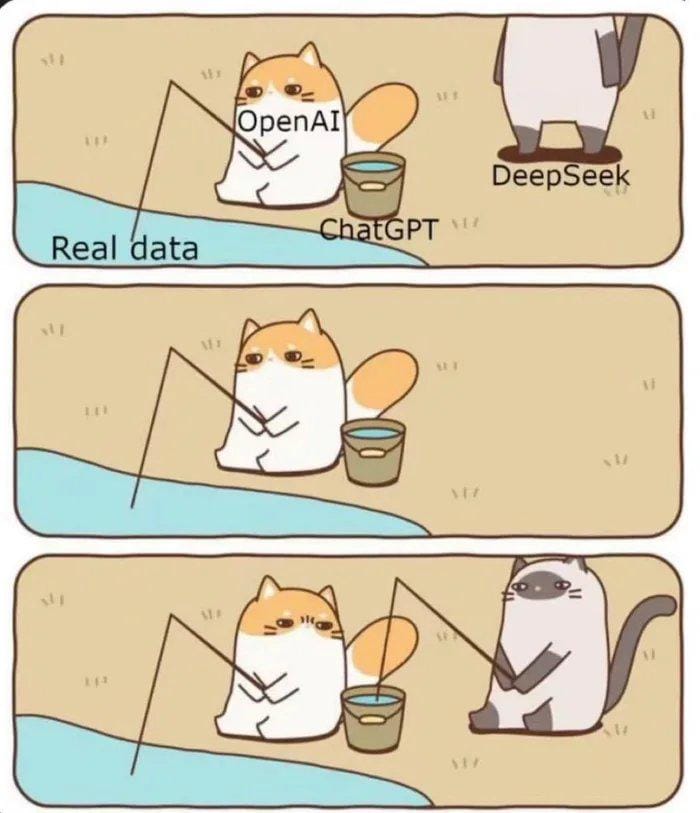

- DeepSeek's reported $5.6M model training cost is misleading - their true investment including infrastructure is closer to $1B, highlighting the significant resources still required for AI development

- While DeepSeek matches OpenAI's o1 model performance, they've achieved this through efficiency optimization rather than breakthrough capabilities - think of it as getting 90% of flagship performance at 20% of the cost

- You can safely access DeepSeek's capabilities through US-based platforms like Perplexity and Venice.AI, or run models locally, avoiding data privacy concerns while still benefiting from their innovations

The Real Story Behind DeepSeek's Cost

DeepSeek's widely reported $5.6M model training cost has generated significant buzz, but this number is misleading. While that figure represents the final training run, it excludes critical infrastructure costs.

The company reportedly owns 50,000 Hopper GPUs worth approximately $1B, revealing the true scale of investment required for AI development.
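To put those two numbers side by side, here is a quick back-of-the-envelope calculation. It only uses the reported figures quoted above (which are estimates, not audited numbers), and the variable names are my own.

```python
# Figures as reported in the text above; they are estimates, not audited numbers.
FINAL_TRAINING_RUN_USD = 5.6e6   # widely reported cost of the final training run
GPU_FLEET_USD = 1.0e9            # approximate value of the reported GPU fleet
GPU_COUNT = 50_000               # reported number of Hopper GPUs

# What the fleet estimate implies per GPU.
implied_cost_per_gpu = GPU_FLEET_USD / GPU_COUNT

# How small the headline training figure is next to the hardware behind it.
training_share_of_hardware = FINAL_TRAINING_RUN_USD / GPU_FLEET_USD

print(f"Implied cost per GPU: ${implied_cost_per_gpu:,.0f}")
print(f"Final training run vs. hardware value: {training_share_of_hardware:.2%}")
```

In other words, the estimate works out to roughly $20,000 per GPU, and the headline training figure is a little over half a percent of the reported hardware value.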

Innovation Within Constraints

Contrary to speculation, DeepSeek's achievements came through optimization rather than rule-breaking.

They specifically designed their model architecture around the memory bandwidth limitations of H800 GPUs, which were allowed under export controls at the time.

Performance vs. Efficiency

DeepSeek hasn't necessarily surpassed OpenAI - they've approached the challenge differently. Their reasoning model R1 matches OpenAI's o1 performance, but OpenAI has already demonstrated more advanced capabilities with o3. The key distinction is that DeepSeek leads in efficiency optimization rather than raw capability.

Understanding Model Comparisons

When evaluating DeepSeek's impact, it's crucial to compare similar model types:

- General-purpose language models: DeepSeek V3 vs OpenAI's GPT-4o

- Reasoning models: DeepSeek R1 vs OpenAI's o1 or o3

The UI Innovation Myth

DeepSeek R1's visible chain of thought, while popular with users, represents a UI choice rather than a technical breakthrough. R1 and OpenAI's o1 have similar reasoning capabilities. OpenAI hides o1's raw reasoning from users, while DeepSeek simply chose to make the thinking process visible.

The Distillation Factor

Evidence suggests DeepSeek likely benefited from model distillation, that is, training on outputs from existing models like ChatGPT. While this practice breaks OpenAI's terms of service, it's not illegal and represents a common approach in AI development.

Using DeepSeek Safely

For those concerned about data privacy, three options exist:

- Use US-based platforms like Perplexity and Venice.AI

- Run models locally through applications like Ollama or LM Studio

- Avoid the native DeepSeek web and mobile apps
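If you're curious what the "run models locally" option looks like in practice, here is a minimal sketch that talks to Ollama's local HTTP API using only Python's standard library. It assumes Ollama is installed and running on its default port (11434) and that you've already downloaded a DeepSeek R1 model. The model tag `deepseek-r1:7b` and the function names are my own choices for illustration, so swap in whatever tag you actually pulled.

```python
import json
import urllib.request

# Assumptions: Ollama is running locally on its default port, and a DeepSeek R1
# model has already been downloaded. The model tag below is illustrative.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "deepseek-r1:7b"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = MODEL_TAG) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model)) as reply:
        return json.loads(reply.read())["response"]
```

With the server running, calling `ask_local_model("Explain model distillation in two sentences.")` returns the model's answer as a string. The request goes to `localhost`, so the prompt is handled by the model on your own machine rather than by DeepSeek's hosted apps.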

Impact on Technology Companies

Rather than spelling doom for NVIDIA or US tech companies, DeepSeek's efficiency improvements could increase overall AI demand through the Jevons paradox: when a resource becomes cheaper to use, total consumption of it often rises rather than falls. Companies like Amazon (AWS), Apple, and Meta stand to benefit from cheaper, more accessible AI capabilities.
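Here's a toy illustration of why cheaper AI doesn't have to mean less demand for compute. It assumes a simple constant-elasticity demand curve, which is my simplification and not a forecast. The 20% cost ratio echoes the rough "20% of the cost" framing from the key takeaways, and the elasticity values are purely hypothetical.

```python
def usage_multiplier(price_ratio: float, elasticity: float) -> float:
    """How much usage grows when price falls to `price_ratio` of its old level.

    Assumes constant-elasticity demand: usage scales with price ** (-elasticity).
    """
    return price_ratio ** (-elasticity)


def compute_demand_multiplier(price_ratio: float, elasticity: float) -> float:
    """Change in total compute demand: each task needs less, but there are more tasks."""
    return price_ratio * usage_multiplier(price_ratio, elasticity)


PRICE_RATIO = 0.2  # each task costs 20% of what it used to (illustrative)

for elasticity in (0.5, 1.0, 1.5):  # hypothetical demand elasticities
    change = compute_demand_multiplier(PRICE_RATIO, elasticity)
    print(f"elasticity {elasticity}: total compute demand x{change:.2f}")
```

When demand is elastic enough (elasticity above 1 in this toy model), total compute demand goes up even though each task got cheaper. Whether real-world AI demand behaves that way is an open question, but that is the mechanism behind the optimistic case for chip makers and cloud providers.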

Making Smart Choices

Before switching to DeepSeek-powered applications, consider the switching costs and your specific needs. If you already use and pay for ChatGPT and value privacy, the benefits may not outweigh the costs of changing platforms.

If you enjoyed this

Check out my video on how you can become AI-native this year without learning how to code.