AI Agents for Curious Beginners

Most explanations of AI Agents are either too technical or too basic. This article is written for readers with zero technical background who regularly use AI tools and want to understand just enough about AI Agents to see how they might affect their daily life and work.

Resources

- Download my free AI Toolkit

- Helena Liu's AI workflow tutorial

- Full Andrew Ng demo video

Key Takeaways

- Large Language Models (LLMs) like ChatGPT are passive systems that generate outputs based on inputs but can't access external information or take autonomous actions.

- AI Workflows combine LLMs with external tools (like calendar access or weather data) but follow rigid, predefined paths set by humans who remain the decision-makers.

- AI Agents represent the next level where the LLM itself becomes the decision-maker, autonomously reasoning about problems, selecting and using appropriate tools, and iterating until goals are achieved.

Level 1: Understanding Large Language Models

Large Language Models form the foundation of popular AI chatbots like ChatGPT, Google Gemini, and Claude. These chatbots are applications built on top of LLMs, and they excel at generating and editing text.

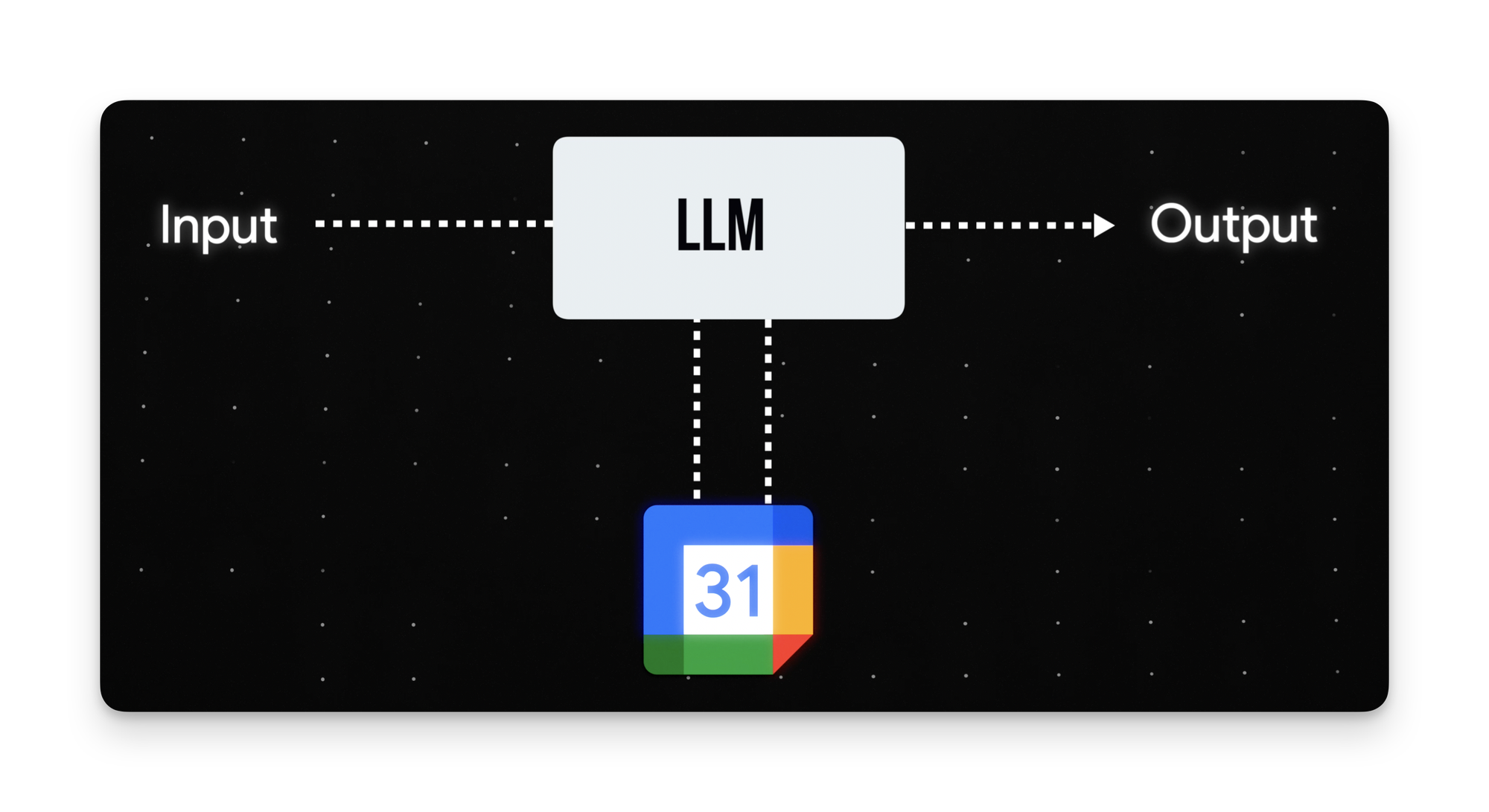

The basic functionality is straightforward: you (the human) provide an input, and the LLM produces an output based on its training data.

- For example, if I ask ChatGPT to draft an email requesting a coffee chat, my prompt serves as the input, and the resulting email—typically more polite than I would naturally write—is the output.

However, LLMs have two important limitations:

- Despite being trained on vast amounts of data, they have limited knowledge of proprietary information, such as your personal calendar or internal company data.

- LLMs are passive—they wait for your prompt and then respond.

These limitations become apparent when asking about personal information. If I ask ChatGPT when my next coffee chat is scheduled, it simply doesn't have access to my calendar to answer correctly.

Level 2: AI Workflows

AI Workflows build upon basic LLMs by adding predefined paths to external information and tools.

Imagine if we instructed an LLM: "Every time I ask about a personal event, perform a search query and fetch data from my Google Calendar before providing a response." With this instruction implemented, the next time I ask, "When is my coffee chat with Elon Husky?" I'll get the correct answer because the LLM will first check my Google Calendar.

But here's where the limitations become apparent: If my next question is "What will the weather be like that day?" the system will fail because the path we defined only includes accessing Google Calendar, which doesn't contain weather information.

This highlights the fundamental trait of AI workflows: they can only follow predefined paths set by humans. In technical terms, this path is called the "Control Logic."
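If you are curious what a predefined path looks like when written down, here is a minimal Python sketch of the calendar example. Everything in it is an assumption made for illustration: the `CALENDAR` data is made up, and `fetch_calendar` and `workflow` are hypothetical names, not part of any real product. A real workflow would call an actual calendar service and an LLM.

```python
from typing import Optional

# Made-up calendar data; a real workflow would call a calendar service instead.
CALENDAR = {"coffee chat with elon husky": "Friday at 10:00"}


def fetch_calendar(question: str) -> Optional[str]:
    """Hypothetical lookup: return the time of an event named in the question."""
    for event, when in CALENDAR.items():
        if event in question.lower():
            return when
    return None


def workflow(question: str) -> str:
    """The control logic: always check the calendar, then answer. Nothing else."""
    when = fetch_calendar(question)
    if when is None:
        return "Sorry, I can't answer that."
    return f"It is scheduled for {when}."


print(workflow("When is my coffee chat with Elon Husky?"))
print(workflow("What will the weather be like that day?"))
```

The first question works because it matches the one path a human wrote. The weather question fails for the same reason: nobody wrote a path for it.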

Real-World Example: Content Creation Workflow

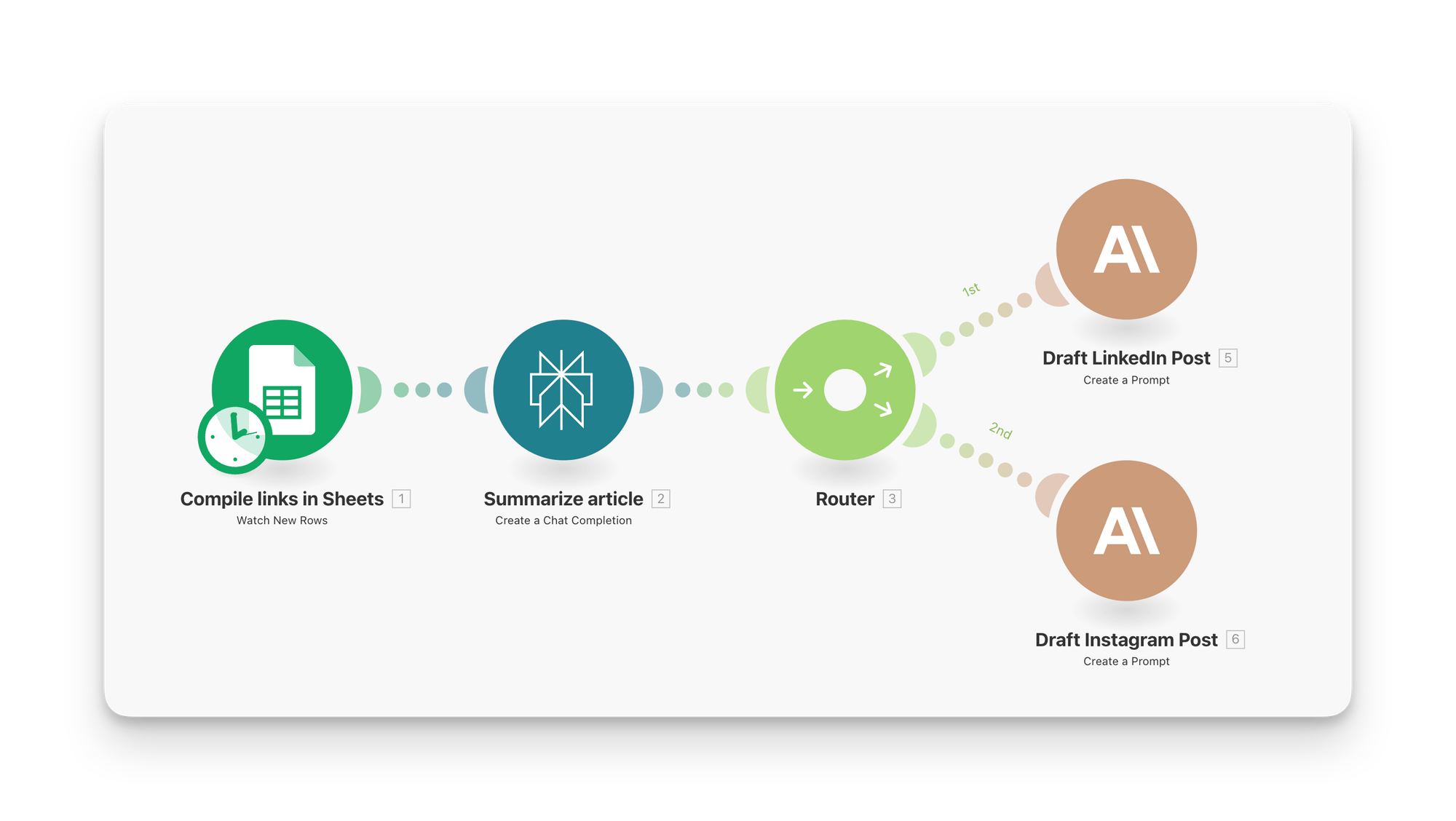

Following Helena Liu's tutorial, I created a simple AI workflow using make.com that:

- Compiles news articles in Google Sheets

- Uses Perplexity to summarize the articles

- Employs Claude, with my custom prompt, to draft LinkedIn and Instagram posts based on those summaries

- Schedules this sequence to run daily at 8 AM

This qualifies as an AI workflow because it follows a predefined path I established: Step 1, Step 2, Step 3, run daily at 8 AM.
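The same fixed sequence can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `summarize` and `draft_post` are hypothetical stand-ins for the Perplexity and Claude steps (they do simple text handling, not real AI calls), and the daily 8 AM schedule is left out.

```python
from typing import List


def summarize(article: str) -> str:
    """Stand-in for the Perplexity step: keep only the first sentence."""
    return article.split(".")[0].strip() + "."


def draft_post(summary: str, prompt: str) -> str:
    """Stand-in for the Claude step: attach the fixed prompt to a summary."""
    return f"[{prompt}] {summary}"


def run_workflow(articles: List[str], prompt: str) -> List[str]:
    """Step 1 (articles in) -> Step 2 (summaries) -> Step 3 (posts), always in this order."""
    summaries = [summarize(article) for article in articles]
    return [draft_post(summary, prompt) for summary in summaries]


posts = run_workflow(["AI agents are here. More details follow."], "Write a LinkedIn post")
print(posts[0])
```

Notice that the order of the steps and the prompt are both chosen by me before the workflow runs. The code never decides anything on its own.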

Importantly, if I test this workflow and don't like the final LinkedIn post—perhaps it's not funny enough—I must manually rewrite the prompt for Claude. The trial-and-error iteration is performed by me, a human.

Level 3: True AI Agents Take Control

The one change that turns an AI workflow into an "AI Agent" is replacing the human decision-maker with an LLM. Continuing our content creation example, let's examine what I've been doing as the human decision-maker:

- Reasoning about the best approach: compiling articles, summarizing them, then writing the final posts.

- Taking action using tools: inserting links in Google Sheets, using Perplexity for summarization, and Claude for copywriting.

For this to become an AI Agent, the LLM must:

- Reason autonomously: "What's the most efficient way to compile news articles? Should I copy and paste each article into a word document? No. It's probably easier to compile links to those articles and use another tool to fetch the data. Yes, that makes more sense."

- Act via tools: "Should I use Microsoft Word to compile links? No, inserting links directly into rows is more efficient. What about Excel? No, the user has already connected their Google account with make.com, so Google Sheets is the better option."
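To make the "LLM picks the tool" idea concrete, here is a rough Python sketch that revisits the calendar and weather questions from Level 2. It rests on loud assumptions: both tools return made-up answers, and `choose_tool` is a simple keyword check standing in for the LLM's reasoning. In a real agent, that decision would come from the model itself, not from a rule I typed.

```python
from typing import Callable, Dict


def calendar_tool(question: str) -> str:
    return "Your coffee chat is on Friday at 10:00."  # made-up data


def weather_tool(question: str) -> str:
    return "Friday looks sunny."  # made-up data


# The tools the agent is allowed to use.
TOOLS: Dict[str, Callable[[str], str]] = {
    "calendar": calendar_tool,
    "weather": weather_tool,
}


def choose_tool(question: str) -> str:
    """Stand-in for the LLM's reasoning step (assumption: a real agent asks the model)."""
    return "weather" if "weather" in question.lower() else "calendar"


def agent(question: str) -> str:
    tool_name = choose_tool(question)  # decided at run time, not hard-wired by a human
    return TOOLS[tool_name](question)


print(agent("When is my coffee chat?"))
print(agent("What will the weather be like that day?"))
```

The difference from the workflow is where the decision lives: the path is chosen while the program runs, based on the question, instead of being fixed in advance.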

A third key trait of AI Agents is their ability to iterate independently. Remember when I had to manually rewrite prompts to make the LinkedIn post funnier? An AI Agent would automate this process by:

- Drafting v1 of a LinkedIn post

- Having a second LLM critique the draft against LinkedIn best practices

- Revising and repeating until the best-practice criteria are met

- Delivering the final, optimized output
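The draft, critique, repeat loop above can be sketched as follows. This is a toy under stated assumptions: `draft` and `critique` are hypothetical stand-ins for two LLM calls, and "at least two jokes" is an invented stand-in for real LinkedIn best practices.

```python
from typing import Tuple


def draft(version: int) -> str:
    """Stand-in writer: each revision adds one more joke marker."""
    return "Big AI news today." + " (joke)" * version


def critique(post: str) -> bool:
    """Stand-in critic: approve once the post contains at least two jokes."""
    return post.count("(joke)") >= 2


def write_until_approved(max_rounds: int = 5) -> Tuple[str, int]:
    """Draft, critique, and revise until approved or the round limit is hit."""
    post = ""
    for version in range(max_rounds):
        post = draft(version)
        if critique(post):
            return post, version + 1  # final post and number of drafts written
    return post, max_rounds


final_post, drafts_written = write_until_approved()
print(final_post, drafts_written)
```

The `max_rounds` limit is a design choice worth noting: without it, a critic that is never satisfied would keep the loop running forever.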

Real-World Example: Video Content Analysis

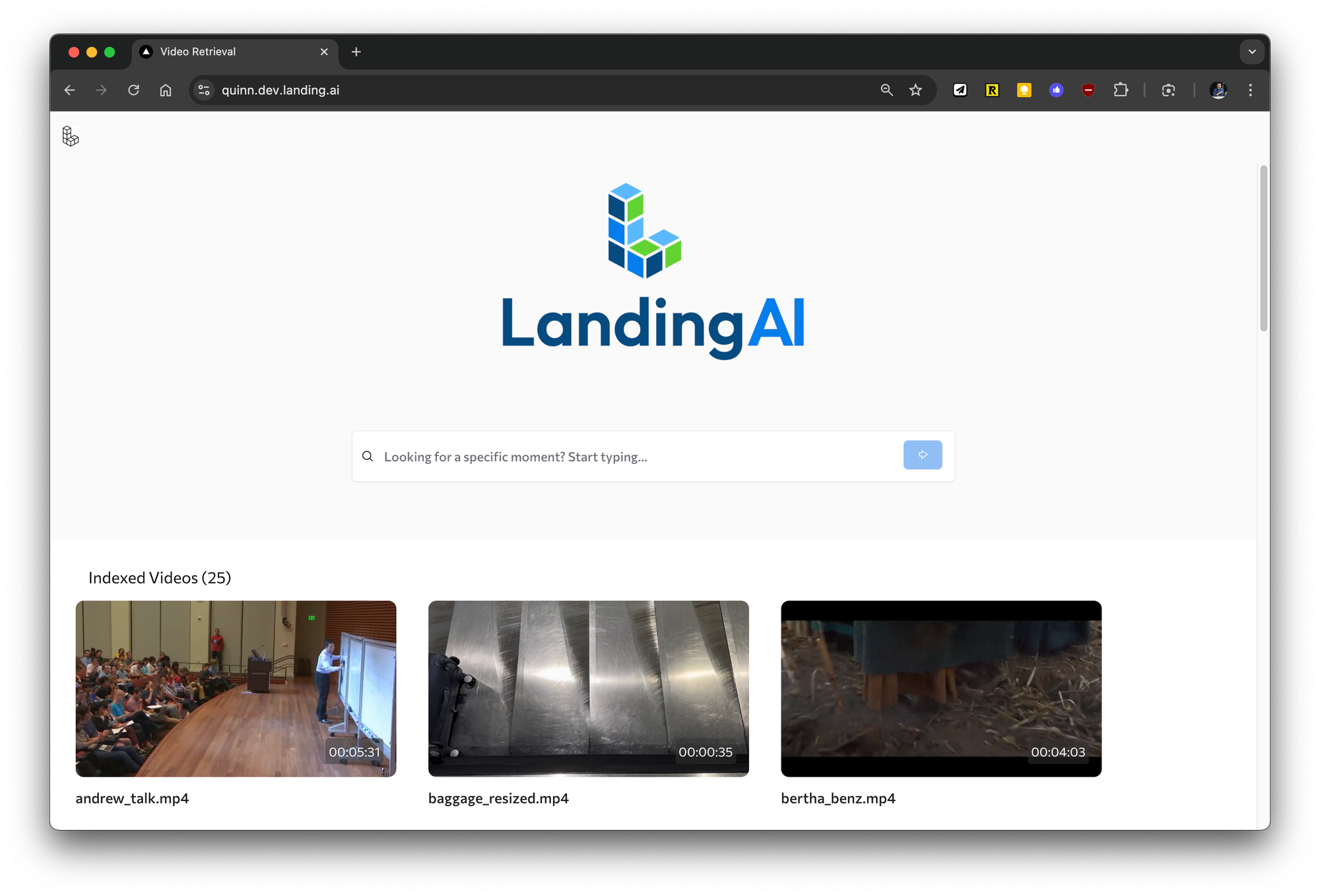

Andrew Ng, a leading figure in AI, created a demo website that illustrates how an AI agent works. When you type a keyword like "skier," an AI Vision Agent:

- Reasons about what a skier looks like (a person moving around in snow)

- Acts by searching through video footage for matching clips

- Indexes those clips

- Returns relevant results

Though this may not seem impressive at first glance, remember that an AI agent performed all these tasks instead of a human who would otherwise need to review footage, identify skiers, and manually add tags like "snow," "ski," and "mountain."

Summary: The Three Levels of AI

To summarize the three levels we've covered:

- Level 1 - Large Language Models: You provide an input, and the LLM responds with an output based on its training.

- Level 2 - AI Workflows: You provide an input AND instruct the LLM to follow a predefined path that may involve retrieving information from external tools. The key trait is that humans program and determine the path.

- Level 3 - AI Agents: The AI agent receives a goal, and the LLM performs reasoning to determine the best approach, takes action using tools, observes interim results, decides whether iterations are needed, and produces a final output that achieves the initial goal. The key trait is that the LLM becomes the decision-maker in the workflow.

If you enjoyed this

If you found this explanation helpful, you might want to check out my tutorial on building a prompts database in Notion to organize and optimize your AI interactions.